Transformer models: Encoder-Decoders

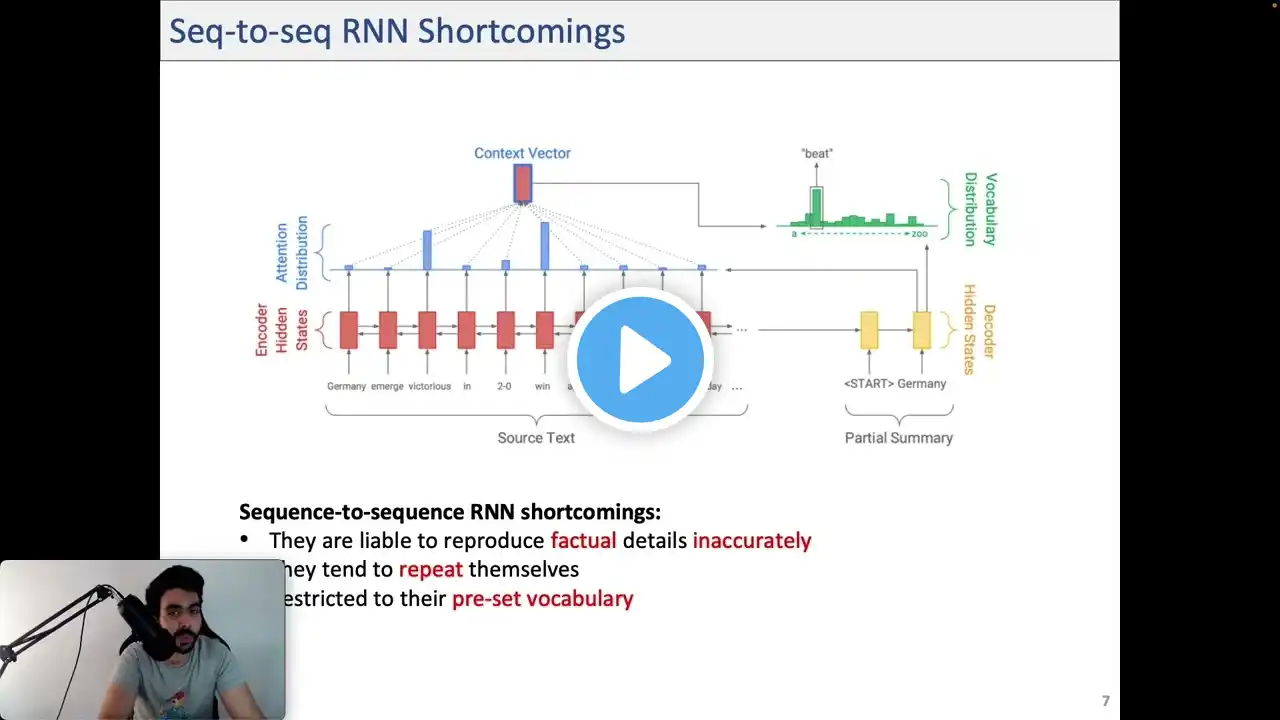

A general, high-level introduction to encoder-decoder (sequence-to-sequence) models built on the Transformer architecture: what they are and when to use them.

This video is part of the Hugging Face course: http://huggingface.co/course

Related videos:

- The Transformer architecture
- Encoder models: "Transformer models: Encoders"
- Decoder models: "Transformer models: Decoders"

To understand what happens inside the Transformer network on a deeper level, we recommend the following blog posts by Jay Alammar:

- The Illustrated Transformer: https://jalammar.github.io/illustrate...
- The Illustrated GPT-2: https://jalammar.github.io/illustrate...
- Understanding Attention: https://jalammar.github.io/visualizin...

For a code-oriented perspective, we recommend the following post:

- The Annotated Transformer, by Harvard NLP: https://nlp.seas.harvard.edu/2018/04/...

Have a question? Check out the forums: https://discuss.huggingface.co/c/cour...

Subscribe to our newsletter: https://huggingface.curated.co/